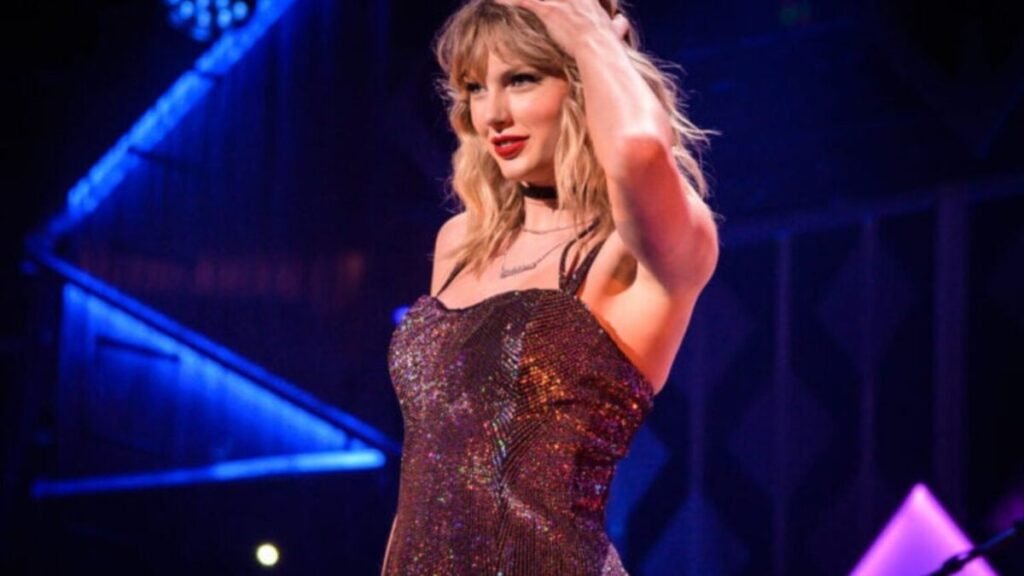

Meta’s stock plummets after news breaks that its chatbots used Taylor Swift’s likeness without permission

Meta sparked a storm when chatbots created by the company and its users imitated several celebrities, including Taylor Swift, without permission on platforms like Facebook, Instagram, and WhatsApp.

The company’s stock fell more than 12% in after-hours trading when the news spread.

It is also said that images of Scarlett Johansson, Anne Hathaway, and Selena Gomez went viral.

Many of these AI-generated personas engaged in seductive or sexual conversations, raising serious concern.

Although many of the bots were generated by users, Reuters revealed that at least three had been created by a Meta employee, and two of those three impersonated Taylor Swift. Before being removed, the bots had logged more than 10 million interactions with users.

Unauthorized likenesses and fans’ fury

Although presented as “parodies,” the bots violated Meta’s policies, particularly its prohibitions on impersonating people and on sexually suggestive images. Some adult bots even depicted celebrities in underwear or in a bathtub, and a chatbot representing a 16-year-old actor generated an inappropriate image of him with a bare torso.

Meta spokesperson Andy Stone told Reuters that the company attributes the rule violations to enforcement failures, adding that it plans to tighten its guidelines.

“Like others, we allow the generation of images containing public figures, but our policies aim to prohibit intimate, sexually suggestive, or nude images,” he said.

Legal risks and industry alarm

The unauthorized use of celebrity images raises legal concerns, particularly under state right-of-publicity laws. Stanford law professor Mark Lemley suggested that the bots likely went too far, since they did not transform the celebrities’ likenesses enough to warrant legal protection.

The issue is part of a broader ethical dilemma regarding AI-generated content. SAG-AFTRA expressed concerns about the implications for real-world safety, especially if users form emotional attachments to digital characters that appear real.

Meta acts but falls short

In response to the scandal, Meta removed several of these bots shortly after Reuters made public what it had found.

At the same time, the company announced new safeguards aimed at protecting teenagers from inappropriate interactions with chatbots. The company stated that it is training its systems to avoid topics like romance, suicide, or self-harm in interactions with minors, as well as temporarily limiting teenagers’ access to certain AI characters.

U.S. lawmakers also reacted, with Senator Josh Hawley leading an investigation and demanding internal documents and risk assessments regarding AI policies that allowed romantic conversations with minors.

Tragic consequences in the real world

One of the most chilling effects was the death of a 76-year-old man with cognitive impairment. He had tried to meet “Big sis Billie,” a Meta AI chatbot inspired by Kendall Jenner.

Believing she was real, the man traveled to New York, had a serious fall near a train station, and later died. The guidelines that used to allow bots to simulate romance, even with minors, increased scrutiny of Meta.

This article has been translated from Gizmodo US by Lucas Handley.