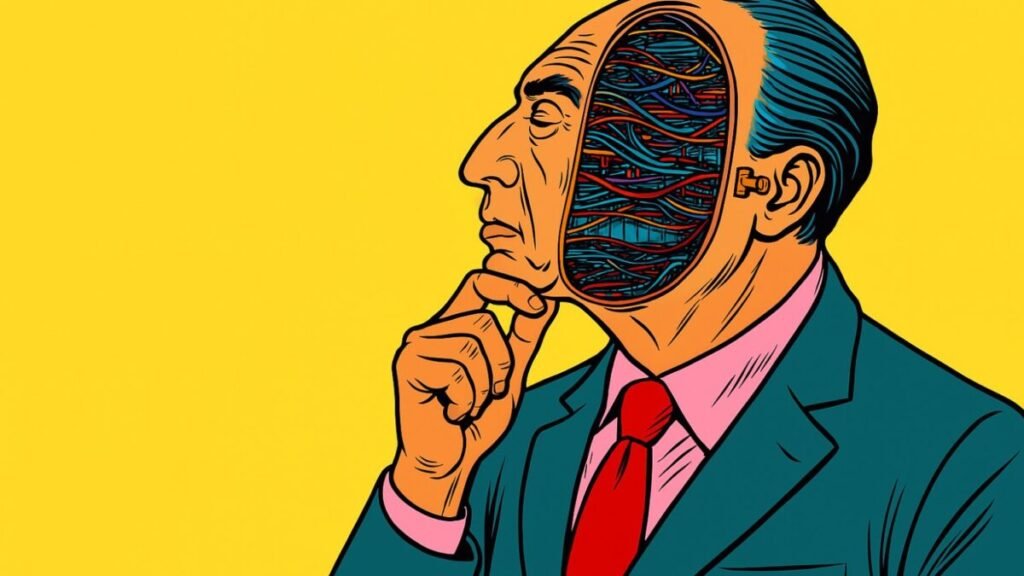

The Invisible Trap of AI: How models like ChatGPT simulate understanding without truly comprehending

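Chatbots like ChatGPT often give the impression that they understand what they are talking about. However, a recent study by researchers from MIT, Harvard, and the University of Chicago suggests the opposite: large language models build a facade that lets them respond coherently without true understanding.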

What is Potemkin understanding?

The study uses this term for the phenomenon in which an AI seems to master a concept but fails when it has to apply it. The name alludes to Potemkin villages, the facades reportedly erected to impress the Russian Empress Catherine the Great. In this case, the facades are flawless definitions that hide a real lack of understanding.

The researchers found that the models correctly defined concepts 94.2% of the time, but their performance dropped when they had to use those concepts: they failed up to 55% of the time when classifying instances of a concept, and 40% of the time when generating examples of it.

The Illusion of Applied Knowledge

These failures are “non-human misunderstandings”: they reflect a deep incoherence in how AI models structure their conceptual representations. A model may seem brilliant and still make mistakes on basic tasks that a human would solve effortlessly.

Most concerning, these tests suggest that exams designed for humans do not measure an AI's real understanding: a model can answer the definition questions correctly and still fail to apply the concepts they cover.

How do they differ from hallucinations?

Hallucinations, factual errors presented as if they were real, are already well known. But Potemkin understanding is a different, deeper problem: whereas hallucinations invent facts, potemkins manufacture a coherence that doesn’t exist. Hallucinations can be checked against data; detecting potemkins requires uncovering the model’s internal contradictions.

According to the authors, the problem affects models such as GPT-4o, Gemini, Claude, and Llama, as well as systems from Alibaba and DeepSeek, alike.

A common challenge for all artificial intelligence

The study subjected AI models to 32 tests of deep understanding, ranging from literary devices to cognitive biases and game theory. In all of them, the models showed that they could explain concepts but not reason with them.

The conclusion is clear: real understanding, the kind that lets a system apply what it knows, remains a hurdle that current systems have not yet cleared. We need them to stop pretending to understand and start truly understanding.